doi: 10.56294/ai202222

REVIEW

Medical Ethics in Terminal Patients: Dilemmas in the Use of AI for End-of-Life Decisions


Ana María Chaves Cano1 *

1Fundación Universitaria Juan N. Corpas. Bogotá, Colombia.

Cite as: Chaves Cano AM. Medical Ethics in Terminal Patients: Dilemmas in the Use of AI for End-of-Life Decisions. EthAIca. 2022; 1:22. https://doi.org/10.56294/ai202222

Submitted: 17-01-2022 Revised: 28-03-2022 Accepted: 15-05-2022 Published: 16-05-2022

Editor: PhD. Rubén González Vallejo

Corresponding Author: Ana María Chaves Cano *

ABSTRACT

Medical ethics in terminal patients faces unprecedented challenges with the integration of artificial intelligence (AI) in end-of-life decision-making. This article aims to analyze the ethical dilemmas arising from the use of AI in this context by exploring its implications for autonomy, dignity, and the humanization of palliative care. To this end, a literature review was conducted of Spanish- and English-language articles indexed in Scopus and published between 2018 and 2022, selecting studies that addressed the intersection of AI, bioethics, and palliative medicine. The results were organized into four thematic axes: patient autonomy and informed consent, algorithmic biases and equity in recommendations, dehumanization versus optimization of care, and legal and moral responsibility in automated decisions. The review found that, although AI can improve the accuracy of prognoses and treatment selection, its implementation requires ethical safeguards to prevent the doctor-patient relationship from being reduced to a technical process. The conclusions highlight the need for regulatory frameworks that balance technological innovation with bioethical principles, prioritizing human dignity and the active participation of patients and families in end-of-life decisions.

Keywords: Medical Ethics; Artificial Intelligence; Palliative Care; Patient Autonomy; End-of-Life Decisions.


INTRODUCTION

Palliative medicine and end-of-life care represent one of the most sensitive areas of clinical practice.(1,2) Traditionally, these decisions have been guided by bioethical principles such as autonomy, beneficence, non-maleficence, and justice.(3) However, advances in artificial intelligence (AI) in healthcare introduce new complexities, especially when predictive algorithms or clinical decision support systems are involved in end-of-life scenarios.(4,5)

AI promises to optimize diagnostic and prognostic processes in terminally ill patients by offering more accurate predictions about survival or responses to treatment.(6,7) However, its application in this context raises fundamental ethical dilemmas.(8) According to Gómez-Cano,(9) the automation of critical decisions could erode the doctor-patient relationship by reducing a deeply human moment to a probabilistic calculation. Furthermore, intrinsic biases in the training data of these systems threaten to perpetuate inequalities in access to quality palliative care.(10,11)

Another central challenge lies in the tension between technical efficiency and humanization.(12) While AI can streamline protocols or identify therapeutic options, its overuse could displace essential elements of palliative care, such as empathy, active listening, and adaptation to individual preferences.(13,14) Studies by Areia(15) and Zeng(16) warn of the risk that healthcare professionals may delegate decisions that require moral sensitivity to technology, especially in cultures where death remains taboo and families demand personalized treatment.

The legal and moral responsibility for using AI in end-of-life decisions is also uncharted territory.(6,9) The opacity of many AI systems makes it difficult to assess whether their conclusions meet ethical standards, requiring transparency and accountability mechanisms.(1,14) Added to this is the vulnerability of terminally ill patients, whose ability to consent to or question automated recommendations may be compromised by their clinical condition.(17)

This article arises from the urgent need to critically analyze these dilemmas at a time when AI is advancing without ethical and legal frameworks evolving at the same pace. Its objective is to examine the moral challenges of using AI in end-of-life decisions through a literature review that identifies the risks and opportunities for preserving human dignity in the care of terminally ill patients. In doing so, it seeks to contribute to the debate on integrating technology into palliative medicine without sacrificing its humanistic foundations.

METHOD

This study is based on a systematic review of the scientific literature on the ethical dilemmas of using AI for decision-making in terminally ill patients. This approach makes it possible to synthesize existing knowledge, identify trends, and critically analyze ethical debates in this emerging field. The review followed a structured protocol, carried out in previously defined stages, intended to ensure rigor in selecting, evaluating, and analyzing sources and to provide a comprehensive perspective.

Definition of search criteria

Keywords in Spanish and English related to medical ethics, AI, palliative care, and end-of-life decisions were established. The search was limited to articles published between 2018 and 2022 in the Scopus database, selected for its broad coverage of scientific literature in health sciences and bioethics.

Initial collection and selection of documents

Filters were applied to include only peer-reviewed articles, book chapters, and systematic reviews. After an initial review of titles and abstracts, publications that did not directly address the intersection between AI and ethics in terminally ill patients were discarded.

Quality and relevance assessment

The preselected documents were analyzed in depth to determine their theoretical and methodological relevance. Studies presenting empirical evidence, solid conceptual frameworks, or well-founded ethical discussions were prioritized.

Extraction and categorization of information

Relevant data were organized around four predefined themes: patient autonomy, algorithmic biases, humanization of care, and legal responsibility. This classification allowed for a comparative analysis of the ethical positions identified in the literature.

Synthesis and critical analysis

Finally, the findings were integrated into a coherent discussion, which made it possible to identify the main perspectives and to highlight points of consensus and controversy in the field.

This methodological approach ensured a comprehensive and well-founded review, which facilitated the identification of gaps in the literature and future projections on the ethical use of AI in palliative medicine.(19,20) The systematization of the process, from search to analysis, helped to minimize bias and ensure the validity of the conclusions obtained.

RESULTS

The literature review identified that incorporating artificial intelligence in end-of-life decision-making raises profound ethical tensions, particularly around preserving human dignity, patient autonomy, and equity in access to palliative care. While AI offers valuable tools for improving diagnostic and prognostic accuracy, its implementation in terminal contexts requires critical reflection on its moral and practical implications. The findings were organized into four thematic areas, ranging from challenges in informed consent to the risks of dehumanization in palliative care. These themes are developed below, integrating theoretical perspectives and current debates in bioethics and medicine.

Patient autonomy and informed consent

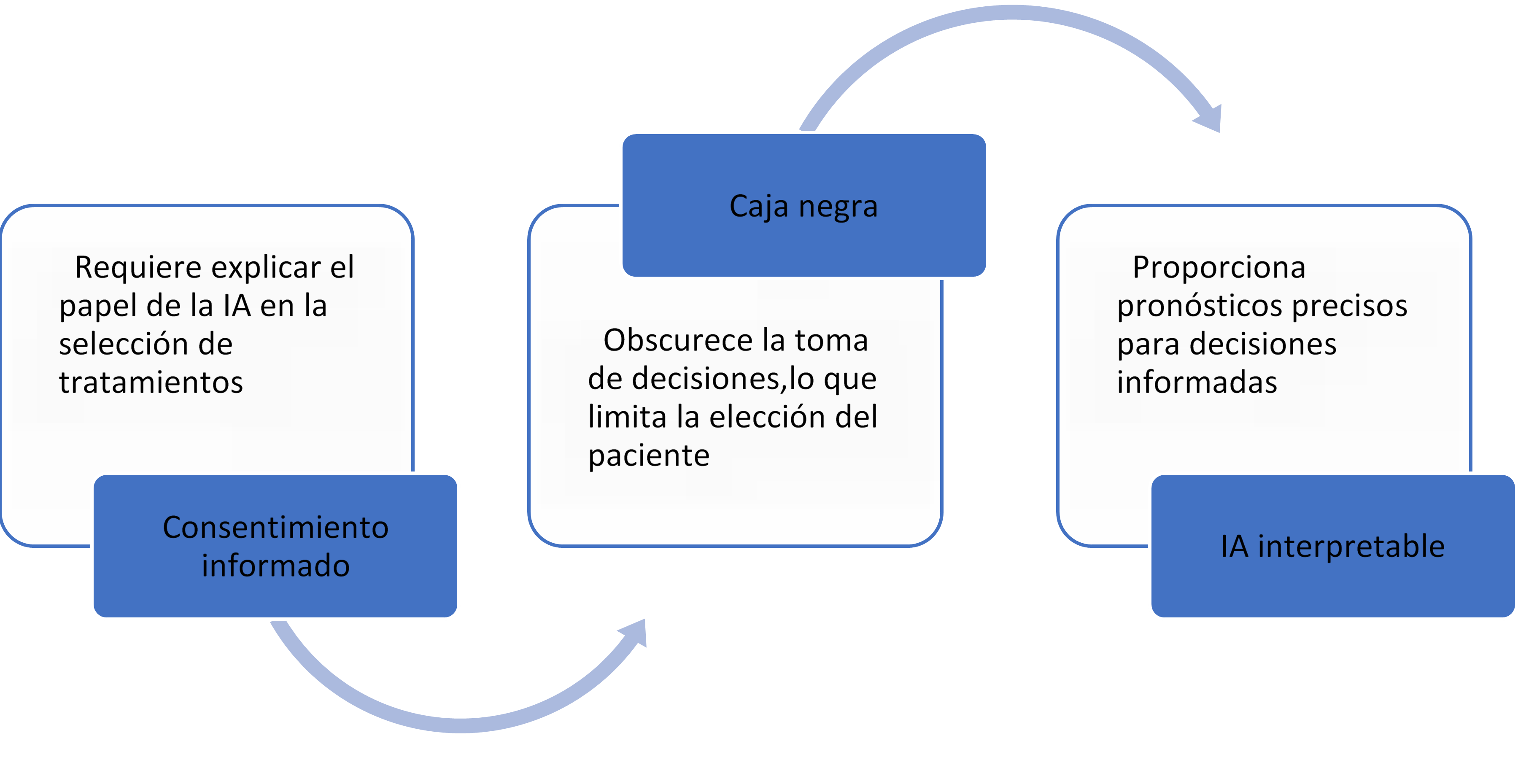

The principle of autonomy, fundamental in medical ethics, faces new challenges as AI systems influence clinical decisions.(21) In terminally ill patients, where decision-making capacity may be compromised by physical or cognitive decline, transparency in the functioning of algorithms becomes critical.(12,22) However, many AI models operate as “black boxes,” making it difficult for physicians, patients, and families to understand how recommendations are generated (figure 1).(23)

Figure 1. Medical ethics requirements in AI

On the other hand, informed consent in this context requires explaining not only the benefits and risks of treatment but also the role that AI plays in its selection. Shlobin et al.(25) point out that, in many cases, patients are unaware that their palliative care plans have been guided by algorithms, which calls into question the ethical validity of their acceptance. In addition, the technical complexity of these systems can create a gap in understanding, in which healthcare professionals act as intermediaries without fully mastering the fundamentals of AI.

The literature also discusses whether AI could strengthen autonomy by providing more accurate prognoses that allow patients to make better-informed decisions. However, this depends on systems being designed to prioritize interpretability and on medical teams receiving training in ethical communication about emerging technologies.

An additional debate revolves around the increased vulnerability of terminally ill patients. Their emotional and physical dependence makes them more susceptible to accepting automated recommendations without question, especially in healthcare systems with time and human resource constraints. This calls for protocols that ensure the active participation of patients and their families in AI-mediated decisions.(30,31)

Algorithmic biases and fairness in recommendations

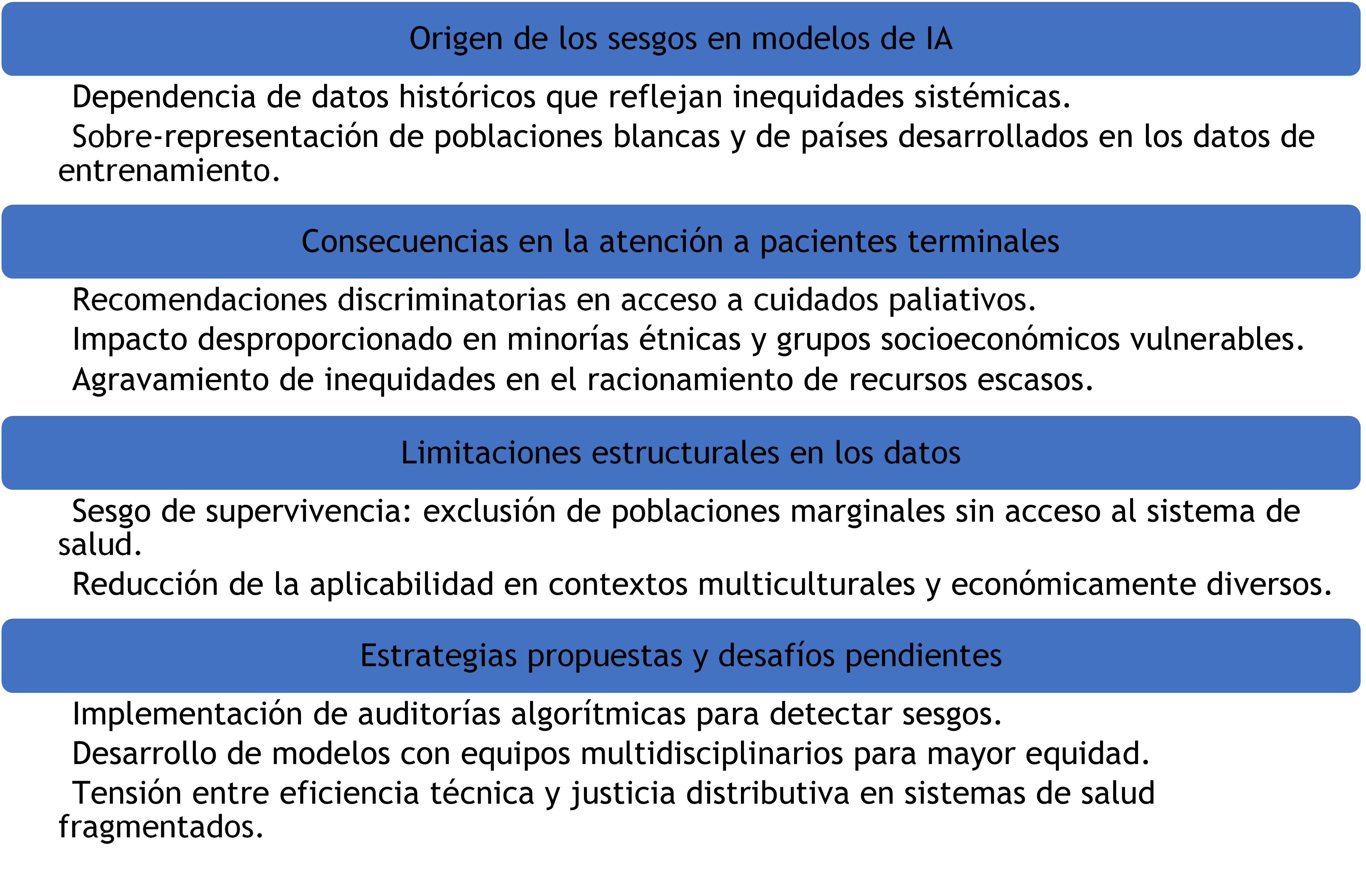

AI's reliance on historical data to train its models introduces the risk of systemic biases that can perpetuate inequalities in the care of terminally ill patients (figure 2).(12) Convie et al.(32) show that algorithms used for survival prognosis are often based on predominantly white populations in developed countries, which reduces their accuracy in minority groups and in populations with less access to healthcare.

Figure 2. Origin and risks associated with algorithmic biases

These biases can translate into discriminatory recommendations, such as underestimating the need for palliative care in patients of certain ethnicities or socioeconomic conditions. This problem is exacerbated when AI is used to ration scarce services, such as intensive care beds or expensive therapies.

The literature also warns about "survival bias," as the available data often comes from patients who have accessed the healthcare system, excluding those in marginalized situations. This limits AI's ability to offer equitable solutions in culturally and economically diverse settings.

In response, strategies such as algorithmic auditing and the inclusion of multidisciplinary teams in the development of these tools have been proposed. However, the challenge of balancing technical efficiency with distributive justice remains, especially in fragmented health systems.
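As an illustration only, one simple form of algorithmic audit compares a prognostic model's performance across patient subgroups, which is how a loss of accuracy in minority groups would first become visible. The Python sketch below is hypothetical: the record fields, the function names `audit_by_group` and `flag_disparity`, the 0.5 decision threshold, and the 0.05 tolerance are assumptions chosen for the example, not a procedure described in the reviewed literature, and the records are synthetic.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple


class Prediction(NamedTuple):
    """Hypothetical audit record: a patient's subgroup, the model's predicted
    probability of death within some prognostic horizon, and the observed outcome."""
    group: str
    predicted_risk: float
    died: bool


def audit_by_group(records: Iterable[Prediction], threshold: float = 0.5) -> Dict[str, float]:
    """Return, for each subgroup, the share of thresholded predictions that match the outcome."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for r in records:
        predicted_death = r.predicted_risk >= threshold  # assumed decision rule
        correct[r.group] += int(predicted_death == r.died)
        total[r.group] += 1
    return {g: correct[g] / total[g] for g in total}


def flag_disparity(accuracy: Dict[str, float], tolerance: float = 0.05) -> bool:
    """Flag the model for human review if subgroup accuracies differ by more than `tolerance`."""
    return max(accuracy.values()) - min(accuracy.values()) > tolerance


# Synthetic, illustrative records only (not data from any study).
records = [
    Prediction("A", 0.8, True), Prediction("A", 0.2, False),
    Prediction("A", 0.7, True), Prediction("A", 0.1, False),
    Prediction("B", 0.6, False), Prediction("B", 0.3, True),
    Prediction("B", 0.9, True), Prediction("B", 0.2, False),
]
accuracy = audit_by_group(records)
print(accuracy)                   # {'A': 1.0, 'B': 0.5}
print(flag_disparity(accuracy))   # True
```

Accuracy parity is only one possible criterion; an audit of a survival model would plausibly also compare calibration across groups. No subgroup metric addresses the "survival bias" described above, because patients who never reached the healthcare system do not appear in the audit data at all.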

Dehumanization versus optimization of care

The use of AI in end-of-life care poses a fundamental paradox: while it can optimize technical aspects of palliative care, it also threatens to depersonalize the doctor-patient relationship.(37) The literature reviewed highlights that palliative medicine rests on humanistic dimensions that algorithms cannot replicate. Qualitative studies reveal that patients and families perceive AI as cold when it replaces meaningful clinical interactions, especially at moments of high emotional stress.

Conversely, some authors argue that AI could free up time for humanized care by automating routine administrative or diagnostic tasks.(39) This potential, however, depends on health systems prioritizing person-centered care models rather than reducing costs through indiscriminate automation.(33,34)

A critical risk identified is the illusion of objectivity. By relying on algorithmic recommendations perceived as "emotion-free," professionals may underestimate crucial contextual aspects in end-of-life decisions. Documented cases show how algorithms have recommended discontinuing treatments without considering their symbolic value to families in the grieving process.

The literature also explores the role of AI in communicating bad news. Although chatbots and virtual avatars have been tested for disclosing terminal diagnoses, their use is controversial: some defend their accuracy in transmitting clinical information, while others emphasize that human presence is irreplaceable in alleviating suffering.

AI should be integrated as a support tool, never as a substitute for ethical clinical judgment. Protocols are needed to define its applications and ensure that technology does not overshadow the human dimension of palliative care.

Legal and moral responsibility in automated decisions

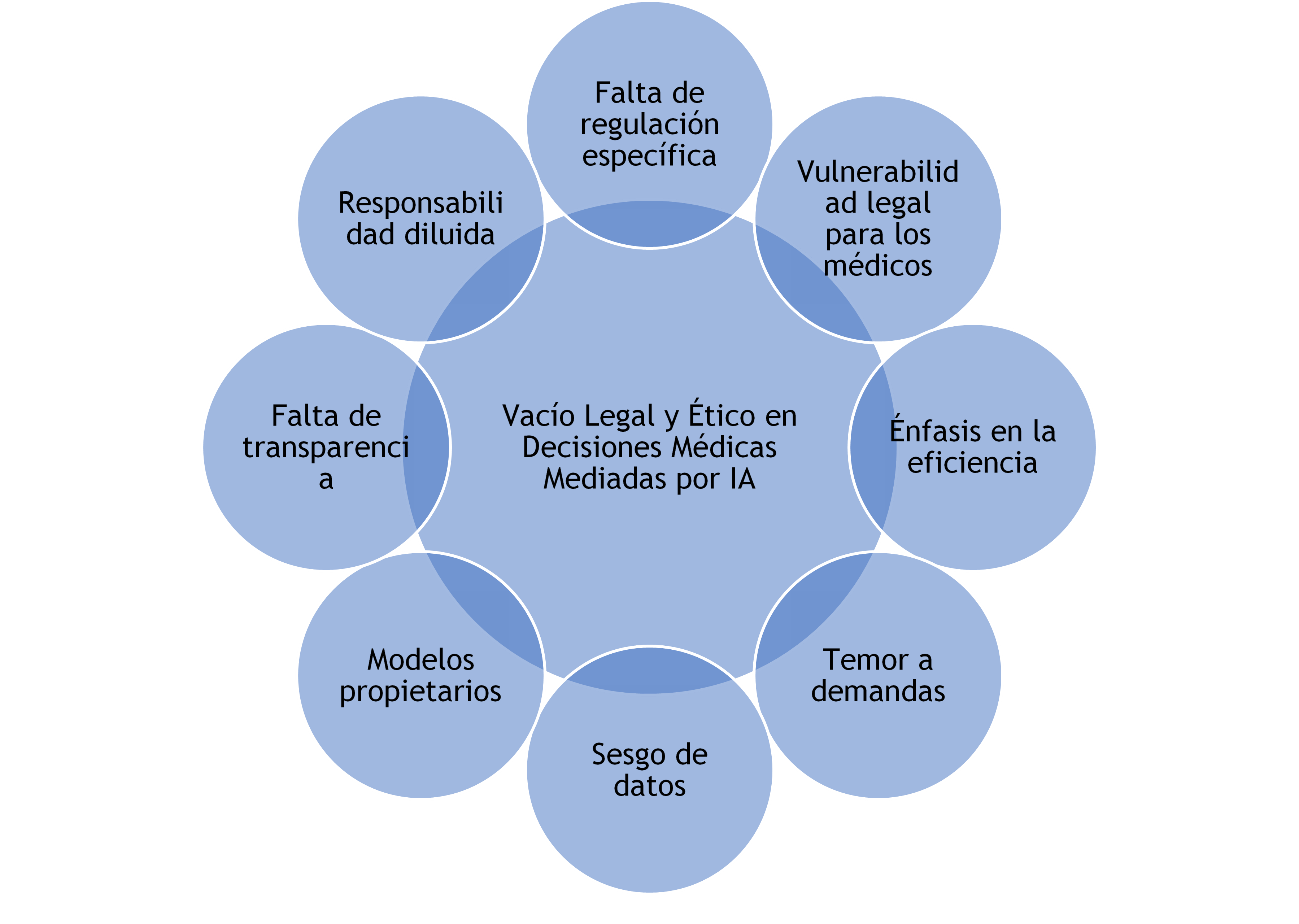

The accountability of AI-mediated decisions in terminal contexts constitutes a legal and ethical gap.(28,33) When an algorithm recommends an action that leads to the premature death of a patient, the chain of responsibility is diluted among developers, medical institutions, and professionals.(45) The review identified that current regulatory frameworks do not adequately address this problem, leaving physicians in a position of legal vulnerability (figure 3).(46)

Figure 3. Ethical and legal challenges in AI-mediated medical decisions

A central debate revolves around the opacity of proprietary algorithms. Technology companies often protect their models as trade secrets, making it impossible to assess whether a recommendation was based on solid evidence or on biased data.(47) This contradicts the bioethical principles of transparency and accountability, which are especially critical when decisions affect life or death.(37)

The literature also analyzes these conflicts from the standpoint of virtue ethics. While traditional palliative medicine values prudence and compassion, AI operates on the basis of efficiency and probability. This clash can lead doctors to prioritize compliance with automated protocols over their own moral judgment. Real-life cases illustrate how professionals have followed algorithmic recommendations against their convictions for fear of lawsuits.(49)

DISCUSSION

The findings of this review reveal a fundamental tension between the ability of artificial intelligence to optimize medical processes and its potential to erode essential aspects of palliative care. While AI-based systems can improve prognostic accuracy and standardize protocols, their implementation without adequate ethical safeguards threatens to transform deeply human decisions into depersonalized technical exercises.(50,51) This paradox is particularly critical in the context of terminally ill patients, where the quality of care is measured not only by clinical outcomes but also by the preservation of dignity and respect for individual preferences.(15,18)

A worrying finding is that biases inherent in training data can perpetuate inequalities in access to quality palliative care. The literature shows that algorithms developed with majority populations often fail to meet the needs of minority or marginalized groups, which calls for more rigorous auditing mechanisms.(45,52) Furthermore, the opacity of many commercial systems makes it difficult to assess whether their recommendations are consistent with principles of equity, posing serious challenges for distributive justice in health.(39)

The analysis also highlights how the growing reliance on algorithmic tools could alter the very nature of the clinical relationship in end-of-life contexts. There is a risk that medical professionals, under pressure to adopt emerging technologies, will delegate to AI systems judgments that require moral sensitivity and contextual understanding.(32,41) This is particularly problematic because automated recommendations do not consider cultural, spiritual, or emotional dimensions central to patients and families in end-of-life processes.(44,53)

Finally, the review identifies an urgent need to develop specific regulatory frameworks that address the ethical particularities of AI use in palliative medicine. These must balance technological innovation with the protection of fundamental values, clearly establishing limits on automating critical decisions and mechanisms for algorithmic transparency.(52,54) The challenge is to harness the advantages of AI without sacrificing the humanistic principles that have traditionally guided the care of terminally ill patients so that human beings always remain at the center of the care process.(55)

CONCLUSIONS

The analysis shows that implementing AI in decision-making for terminally ill patients requires a delicate balance between technological innovation and the preservation of fundamental ethical principles. While these systems can optimize technical aspects of palliative care, their use must be strictly regulated to ensure patient autonomy, avoid algorithmic bias, maintain the humanization of care, and clarify legal and moral responsibilities. It is concluded that AI should function as a support tool—never as a substitute—for clinical judgment and the humanistic values that guide end-of-life care, requiring specific ethical frameworks and professional training for its responsible use.

The research reveals an urgent need to develop protocols prioritizing algorithmic transparency, equity in access, and the active participation of patients and families to ensure that technology improves—not erodes—the quality of person-centered palliative care. Future studies should explore the perceptions of terminally ill patients themselves about these technologies, a critical aspect that has been little researched to date.

REFERENCES

1. Garetto F, Cancelli F, Rossi R, Maltoni M. Palliative Sedation for the Terminally Ill Patient. CNS Drugs 2018;32(10):951-961. https://doi.org/10.1007/s40263-018-0576-7

2. Tatum P, Mills S. Hospice and Palliative Care: An Overview. Medical Clinics of North America 2020;104(3):359-373. https://doi.org/10.1016/j.mcna.2020.01.001

3. Rosenberg L, Fishel A, Harley R, Stern T, Emanuel L, Cohen J. Psychological Dimensions of Palliative Care Consultation: Approaches to Seriously Ill Patients at the End of Life. Journal of Clinical Psychiatry 2022;83(2):22ct14391. https://doi.org/10.4088/JCP.22ct14391

4. Gómez Cano CM, Valencia Arias JA. Mechanisms used to measure technological innovation capabilities in organizations: results from a bibliometric analysis. Revista Guillermo de Ockham 2020;18(1):69-79. https://doi.org/10.21500/22563202.4550

5. Quinn K, Wegier P, Stukel T, Huang A, Bell C, Tanuseputro P. Comparison of Palliative Care Delivery in the Last Year of Life Between Adults With Terminal Noncancer Illness or Cancer. JAMA Network Open 2021;4(3):e210677. https://doi.org/10.1001/jamanetworkopen.2021.0677

6. Heydari H, Hojjat-Assari S, Almasian M, Pirjani P. Exploring health care providers’ perceptions about home-based palliative care in terminally ill cancer patients. BMC Palliative Care 2019;18:66. https://doi.org/10.1186/s12904-019-0452-3

7. Gómez CA, Sánchez V, Fajardo MY. Projects and their dimensions: a conceptual approach. Contexto 2018;7:57-64. http://contexto.ugca.edu.co

8. Anandarajah G, Mennillo H, Rachu G, Harder T, Ghosh J. Lifestyle Medicine Interventions in Patients With Advanced Disease Receiving Palliative or Hospice Care. American Journal of Lifestyle Medicine 2019;14(3):243-257. https://doi.org/10.1177/1559827619830049

9. Gómez-Cano C, Sánchez V, Tovar G. Endogenous factors causing irregular permanence: a reading from teaching practice. Educación y Humanismo 2018;20(35):96-112. https://doi.org/10.17081/eduhum.20.35.3030

10. Coeckelbergh M. AI for climate: freedom, justice, and other ethical and political challenges. AI and Ethics 2020;1(1):67-72. https://doi.org/10.1007/s43681-020-00007-2

11. Simon S, Pralong A, Radbruch L, Bausewein C, Voltz R. The Palliative Care of Patients With Incurable Cancer. Deutsches Ärzteblatt International 2020;116(7):108-115. https://doi.org/10.3238/arztebl.2020.0108

12. Frasca M, Galvin A, Raherison C, Soubeyran P, Burucoa B, Bellera C, Mathoulin-Pélissier S. Palliative versus hospice care in patients with cancer: a systematic review. BMJ Supportive & Palliative Care 2020;11(2):188-199. https://doi.org/10.1136/bmjspcare-2020-002195

13. Kim C, Kim S, Lee K, Choi J, Kim S. Palliative Care for Patients With Heart Failure. Journal of Hospice and Palliative Nursing 2022;24(3):E151-E158. https://doi.org/10.1097/NJH.0000000000000869

14. Gómez-Monroy CA, Cuesta-Ramírez DH, Gómez-Cano CA. Analysis of the perception of tax on the consumption of plastic bags charged in large and medium-sized establishments from the center of Florence. LOGINN Investigación Científica y Tecnológica 2021;5(1). https://doi.org/10.23850/25907441.4320

15. Areia N, Gongóra J, Major S, Oliveira V, Relvas A. Support interventions for families of people with terminal cancer in palliative care. Palliative and Supportive Care 2020;18(5):580-588. https://doi.org/10.1017/S1478951520000127

16. Zeng Y, Wang C, Ward K, Hume A. Complementary and Alternative Medicine in Hospice and Palliative Care: A Systematic Review. Journal of Pain and Symptom Management 2018;56(5):781-794.e4. https://doi.org/10.1016/j.jpainsymman.2018.07.016

17. Strang P. Palliative oncology and palliative care. Molecular Oncology 2022;16(19):3399-3409. https://doi.org/10.1002/1878-0261.13278

18. Guayara Cuéllar CT, Millán Rojas EE, Gómez Cano CA. Design of a virtual digital literacy course for teachers at the University of Amazonia. Revista Científica 2019;(34):34-48. https://doi.org/10.14483/23448350.13314

19. Hui D, Bruera E. Models of Palliative Care Delivery for Patients With Cancer. Journal of Clinical Oncology 2020;38(9):852-865. https://doi.org/10.1200/JCO.18.02123

20. Rigue A, Monteiro D. Difficulties of nursing professionals in the care management of cancer patients in palliative care. Research, Society and Development 2020;9(10):e9109073. https://doi.org/10.33448/rsd-v9i10.9073

21. Gómez-Cano C, Sánchez-Castillo V. Evaluation of the maturity level in project management of a public services company. Económicas CUC 2021;42(2):133-144. https://doi.org/10.17981/econcuc.42.2.2021.Org.7

22. Wong-Parodi G, Mach K, Jagannathan K, Sjostrom K. Insights for developing effective decision support tools for environmental sustainability. Current Opinion in Environmental Sustainability 2020;42:52-59. https://doi.org/10.1016/j.cosust.2020.01.005

23. Lanini I, Samoni S, Husain-Syed F, Fabbri S, Canzani F, Messeri A, et al. Palliative Care for Patients with Kidney Disease. Journal of Clinical Medicine 2022;11(13):3923. https://doi.org/10.3390/jcm11133923

24. Pickering J. Trust, but Verify: Informed Consent, AI Technologies, and Public Health Emergencies. Future Internet 2021;13(5):132. https://doi.org/10.3390/fi13050132

25. Shlobin N, Sheldon M, Lam S. Informed consent in neurosurgery: a systematic review. Neurosurgical Focus 2020;49(5):E6. https://doi.org/10.3171/2020.8.FOCUS20611

26. Mena-Vázquez N, Gomez-Cano C, Perez-Albaladejo L, Manrique-Arija S, Romero-Barco C, Aguilar-Hurtado C, et al. Prospective follow-up of a cohort of patients with interstitial lung disease associated with rheumatoid arthritis in treatment with DMARD. Annals of the Rheumatic Diseases 2018;77(Suppl 2):585. https://doi.org/10.1136/annrheumdis-2018-eular.1935

27. Glaser J, Nouri S, Fernández A, Sudore R, Schillinger D, Klein-Fedyshin M, Schenker Y. Interventions to Improve Patient Comprehension in Informed Consent for Medical and Surgical Procedures: An Updated Systematic Review. Medical Decision Making 2020;40(2):119-143. https://doi.org/10.1177/0272989X19896348

28. Resnik D. Informed Consent, Understanding, and Trust. American Journal of Bioethics 2021;21(5):61-63. https://doi.org/10.1080/15265161.2021.1906987

29. Ulibarri N, Ajibade I, Galappaththi E, Joe E, Lesnikowski A, Mach K, et al. A global assessment of policy tools to support climate adaptation. Climate Policy 2022;22(1):77-96. https://doi.org/10.1080/14693062.2021.2002251

30. Rodríguez-Torres E, Gómez-Cano C, Sánchez-Castillo V. Management information systems and their impact on business decision making. Data and Metadata 2022;1:21. https://doi.org/10.56294/dm202221

31. Sherman K, Kilby C, Pehlivan M, Smith B. Adequacy of measures of informed consent in medical practice: A systematic review. PLoS ONE 2021;16(5):e0251485. https://doi.org/10.1371/journal.pone.0251485

32. Convie L, Carson E, McCusker D, McCain R, McKinley N, Campbell W, et al. The patient and clinician experience of informed consent for surgery: a systematic review of the qualitative evidence. BMC Medical Ethics 2020;21:58. https://doi.org/10.1186/s12910-020-00501-6

33. Algu K. Denied the right to comfort: Racial inequities in palliative care provision. EClinicalMedicine 2021;34:100833. https://doi.org/10.1016/j.eclinm.2021.100833

34. Cloyes K, Candrian C. Palliative and End-of-Life Care for Sexual and Gender Minority Cancer Survivors: a Review of Current Research and Recommendations. Current Oncology Reports 2021;23:146. https://doi.org/10.1007/s11912-021-01034-w

35. Berkman C, Stein G. Experiences of LGBT Patients and Families With Hospice and Palliative Care: Perspectives of the Palliative Care Team. Innovation in Aging 2020;4(Suppl 1):67. https://doi.org/10.1093/geroni/igaa057.218

36. Koffman J, Bajwah S, Davies J, Hussain J. Researching minoritised communities in palliative care: An agenda for change. Palliative Medicine 2022;36(4):530-542. https://doi.org/10.1177/02692163221132091

37. De Panfilis L, Peruselli C, Tanzi S, Botrugno C. AI-based clinical decision-making systems in palliative medicine: ethical challenges. BMJ Supportive & Palliative Care 2023;13(2):183-189. https://doi.org/10.1136/bmjspcare-2021-002948

38. Gajra A, Zettler M, Miller K, Frownfelter J, Showalter J, Valley A, et al. Impact of Augmented Intelligence on Utilization of Palliative Care Services in a Real-World Oncology Setting. JCO Oncology Practice 2022;18(2):e80-e88. https://doi.org/10.1200/OP.21.00179

39. Guzmán DL, Gómez-Cano C, Sánchez-Castillo V. State building through citizen participation. Revista Academia & Derecho 2022;14(25). https://doi.org/10.18041/2215-8944/academia.25.10601

40. Greer J, Moy B, El-Jawahri A, Jackson V, Kamdar M, Jacobsen J, et al. Randomized Trial of a Palliative Care Intervention to Improve End-of-Life Care Discussions in Patients With Metastatic Breast Cancer. Journal of the National Comprehensive Cancer Network 2022;20(2):136-143. https://doi.org/10.6004/jnccn.2021.7040

41. Harasym P, Brisbin S, Afzaal M, Sinnarajah A, Venturato L, Quail P, et al. Barriers and facilitators to optimal supportive end-of-life palliative care in long-term care facilities: a qualitative descriptive study of community-based and specialist palliative care physicians’ experiences, perceptions and perspectives. BMJ Open 2020;10(8):e037466. https://doi.org/10.1136/bmjopen-2020-037466

42. Pérez-Gamboa AJ, Gómez-Cano C, Sánchez-Castillo V. Decision making in university contexts based on knowledge management systems. Data and Metadata 2022;2:92. https://doi.org/10.56294/dm202292

43. Ziegler L, Craigs C, West R, Carder P, Hurlow A, Millares-Martin P, et al. Is palliative care support associated with better quality end-of-life care indicators for patients with advanced cancer? A retrospective cohort study. BMJ Open 2018;8(1):e018284. https://doi.org/10.1136/bmjopen-2017-018284

44. Sleeman K, Timms A, Gillam J, Anderson J, Harding R, Sampson E, Evans C. Priorities and opportunities for palliative and end of life care in United Kingdom health policies: a national documentary analysis. BMC Palliative Care 2021;20:171. https://doi.org/10.1186/s12904-021-00802-6

45. Evans C, Ison L, Ellis-Smith C, Nicholson C, Costa A, Oluyase A, et al. Service Delivery Models to Maximize Quality of Life for Older People at the End of Life: A Rapid Review. Milbank Quarterly 2019;97(1):113-175. https://doi.org/10.1111/1468-0009.12373

46. Parviainen J, Rantala J. Chatbot breakthrough in the 2020s? An ethical reflection on the trend of automated consultations in health care. Medicine, Health Care and Philosophy 2022;25(1):61-71. https://doi.org/10.1007/s11019-021-10049-w

47. Verdicchio M, Perin A. When Doctors and AI Interact: on Human Responsibility for Artificial Risks. Philosophy & Technology 2022;35:11. https://doi.org/10.1007/s13347-022-00506-6

48. Mykhailov D. A moral analysis of intelligent decision-support systems in diagnostics through the lens of Luciano Floridi’s information ethics. Human Affairs 2021;31(2):149-164. https://doi.org/10.1515/humaff-2021-0013

49. Erkal E, Akpınar A, Erkal H. Ethical evaluation of artificial intelligence applications in radiotherapy using the Four Topics Approach. Artificial Intelligence in Medicine 2021;115:102055. https://doi.org/10.1016/j.artmed.2021.102055

50. Pérez Gamboa AJ, García Acevedo Y, García Batán J. Life project and university training process: an exploratory study at the University of Camagüey. Transformación 2019;15(3):280-296. http://scielo.sld.cu/scielo.php?script=sci_arttext&pid=S2077-29552019000300280

51. Lang B. Are physicians requesting a second opinion really engaging in a reason-giving dialectic? Normative questions on the standards for second opinions and AI. Journal of Medical Ethics 2022;48(4):234-235. https://doi.org/10.1136/medethics-2022-108246

52. Naik N, Hameed B, Shetty D, Swain D, Shah M, Paul R, et al. Legal and Ethical Consideration in Artificial Intelligence in Healthcare: Who Takes Responsibility? Frontiers in Surgery 2022;9:862322. https://doi.org/10.3389/fsurg.2022.862322

53. McDonald J, Graves J, Abrahams N, Thorneycroft R, Hegazi I. Moral judgement development during medical student clinical training. BMC Medical Education 2021;21:585. https://doi.org/10.1186/s12909-021-02572-4

54. De Campos Freitas C, Osório F. Moral judgment and hormones: A systematic literature review. PLoS ONE 2022;17(4):e0265693. https://doi.org/10.1371/journal.pone.0265693

55. Quek C, Ong R, Wong R, Chan S, Chok A, Shen G, et al. Systematic scoping review on moral distress among physicians. BMJ Open 2022;12(8):e064029. https://doi.org/10.1136/bmjopen-2022-064029

FUNDING

None.

CONFLICT OF INTEREST

The author declares that there is no conflict of interest.

AUTHOR CONTRIBUTION

Conceptualization: Ana María Chaves Cano.

Data curation: Ana María Chaves Cano.

Formal analysis: Ana María Chaves Cano.

Funding acquisition: Ana María Chaves Cano.

Investigation: Ana María Chaves Cano.

Methodology: Ana María Chaves Cano.

Project management: Ana María Chaves Cano.

Resources: Ana María Chaves Cano.

Software: Ana María Chaves Cano.

Supervision: Ana María Chaves Cano.

Validation: Ana María Chaves Cano.

Visualization: Ana María Chaves Cano.

Writing – original draft: Ana María Chaves Cano.

Writing – review and editing: Ana María Chaves Cano.