REVIEW

AI and Climate Justice: Ethical Risks of Predictive Models in Environmental Policies


Tulio Andres Clavijo Gallego1 *

1Universidad del Cauca. Popayan. Colombia.

Cite as: Clavijo Gallego TA. AI and Climate Justice: Ethical Risks of Predictive Models in Environmental Policies. EthAIca. 2023; 2:51. https://doi.org/10.56294/ai202351

Submitted: 17-04-2022 Revised: 28-08-2022 Accepted: 22-12-2022 Published: 01-01-2023

Editor: PhD. Rubén González Vallejo

Corresponding author: Tulio Andres Clavijo Gallego *

ABSTRACT

The increasing implementation of predictive artificial intelligence (AI) models in environmental policies poses critical challenges for climate justice, particularly concerning equity and the rights of vulnerable communities. This article analyzes the ethical risks associated with the use of AI in environmental decision-making by examining how these systems can perpetuate existing inequalities or generate new forms of exclusion. Through a systematic review of articles in Spanish and English indexed in Scopus between 2018 and 2022, four central thematic axes were identified: algorithmic biases and territorial discrimination, opacity in climate governance, displacement of political responsibilities, and exclusion of local knowledge in predictive models. The results reveal that, although AI can optimize natural resource management and support climate change mitigation, its application without ethical regulation tends to favor actors with greater technological and economic power, marginalizing populations historically affected by the environmental crisis. The article concludes that governance frameworks are needed that prioritize algorithmic transparency, community participation, and accountability, so that AI-based solutions do not deepen existing climate injustices.

Keywords: Artificial Intelligence; Climate Justice; Algorithmic Biases; Environmental Governance; Public Policies.


INTRODUCTION

The global climate crisis has prompted governments and organizations to adopt advanced technological tools to design more effective environmental policies.(1,2) Among these, artificial intelligence (AI) predictive models have gained prominence for their ability to analyze large volumes of climate data, predict future scenarios, and optimize resource allocation.(3) However, this growing reliance on algorithms raises fundamental ethical questions, particularly regarding its impact on climate justice.(4,5) While AI promises technical solutions to complex problems, its implementation can reproduce or even exacerbate structural inequalities, disproportionately affecting communities that are already vulnerable.(6)

A critical issue is how biases intrinsic to training data and algorithmic design can distort environmental policy priorities.(7,8) Recent studies show that predictive models used to manage water resources or predict natural disasters are often built on information from developed regions, which limits their applicability in contexts with different socio-ecological realities.(4,9) This representativeness gap can lead to environmental interventions that ignore the needs of marginalized populations, thereby deepening historical patterns of exclusion.(10)

Furthermore, the opacity of many AI systems makes it difficult to assess whether their recommendations reinforce unequal power dynamics.(11)

As an ethical-political framework, climate justice requires that environmental solutions consider not only technical efficiency but also the equitable distribution of their benefits and burdens.(12,13) In this sense, AI introduces a tension between scalability and contextualization: while algorithms seek to generalize patterns, climate injustices are inherently local and specific.(14,15)

Another ethical challenge lies in the governance of these systems. The growing privatization of predictive tools by technology corporations concentrates decision-making power in actors with commercial interests and marginalizes local communities in processes that directly affect them. This dynamic calls into question the basic democratic principles of participation and transparency, which are essential for legitimate and effective environmental policies.(2,7) Furthermore, relying on AI as a technological solution can allow governments to evade political responsibilities by attributing complex decisions to systems presented as neutral and objective.(18)

There is a palpable risk that predictive models will render invisible traditional and local knowledge that has proven crucial for climate adaptation in many regions.(19) By privileging quantifiable data over ancestral knowledge, these systems can erode sustainable practices developed over generations, replacing them with standardized but culturally inappropriate approaches.(13,20)

This article arises from the urgent need to critically examine how AI has reshaped the field of environmental policy and its implications for climate justice. Its objective is to analyze the main ethical risks associated with using predictive models in this area, identifying the challenges and opportunities for developing more equitable and participatory governance frameworks. In doing so, it seeks to contribute to the debate on how to harness the potential of AI without sacrificing the fundamental principles of equity and environmental justice.

METHOD

This study is based on a systematic review of the academic literature on the application of AI predictive models in environmental policy and their relationship to climate justice. Adopting a qualitative approach, the research critically analyzed indexed scientific publications to identify patterns, tensions, and gaps in the ethical debate on this emerging issue.(21,22) The methodological process was structured in five stages designed to ensure the comprehensiveness and validity of the findings.

The first stage consisted of defining the search criteria: Boolean search strings were built by combining key terms such as “artificial intelligence,” “climate justice,” “environmental ethics,” and “public policy.” The search was limited to documents published between 2018 and 2022 in the Scopus and Web of Science databases, with priority given to peer-reviewed articles in Spanish and English. In the second stage, document preselection, thematic relevance filters were applied to titles, abstracts, and keywords, and works that did not directly address the intersection between AI and climate justice were discarded.
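Stages one and two can be sketched in code. The Python example below is a hypothetical reconstruction: the article does not publish its exact search string, so the grouping of terms (synonyms joined with OR inside a group, groups joined with AND) and the keyword screen are assumptions. The `TITLE-ABS-KEY` and `PUBYEAR` field codes follow Scopus advanced-search conventions; Web of Science uses a different syntax, and either should be checked against the database documentation before use. The screen is only a crude automated stand-in for the relevance judgment described above.

```python
# Hypothetical reconstruction of stages 1-2: build a Boolean query from term
# groups and screen records by title, abstract, and keywords.


def build_query(term_groups, first_year, last_year):
    """OR terms within a group, AND across groups, then restrict years.

    Uses Scopus-style field codes (TITLE-ABS-KEY, PUBYEAR); verify them
    against the database's own documentation before running a search.
    """
    clauses = []
    for group in term_groups:
        quoted = " OR ".join(f'"{term}"' for term in group)
        clauses.append(f"({quoted})")
    body = " AND ".join(clauses)
    return (f"TITLE-ABS-KEY({body}) "
            f"AND PUBYEAR > {first_year - 1} AND PUBYEAR < {last_year + 1}")


def passes_screen(record, term_groups):
    """Keep a record only if every group has a term in title/abstract/keywords."""
    text = " ".join([
        record.get("title", ""),
        record.get("abstract", ""),
        " ".join(record.get("keywords", [])),
    ]).lower()
    return all(any(term.lower() in text for term in group) for group in term_groups)


# Assumed grouping of the key terms named in the method section.
TERM_GROUPS = [
    ["artificial intelligence"],
    ["climate justice", "environmental ethics"],
    ["public policy"],
]

if __name__ == "__main__":
    print(build_query(TERM_GROUPS, 2018, 2022))
    sample = {
        "title": "Artificial intelligence and climate justice",
        "abstract": "Implications for public policy.",
        "keywords": [],
    }
    print(passes_screen(sample, TERM_GROUPS))
```

Running the script prints one query combining the three term groups with the 2018 to 2022 window, followed by `True` for the sample record, which mentions a term from every group.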

The third stage involved an in-depth content analysis, in which the selected documents were examined through thematic coding to identify emerging categories. This process made it possible to organize the literature into the four main analytical axes that structure the results. In the fourth stage, methodological triangulation, academic sources were contrasted with technical reports from international organizations and relevant case studies, enriching the analysis with multilevel perspectives.

Finally, the fifth stage consisted of an interpretive synthesis, in which the findings were integrated into a coherent analytical framework on the ethical risks of AI in environmental policies. This approach made it possible to address some limitations of traditional reviews by incorporating both the technical and the socio-political dimensions of the problem. The methodology made it possible not only to identify trends in the literature but also to reveal contradictions and critical gaps in this emerging field of study.

RESULTS

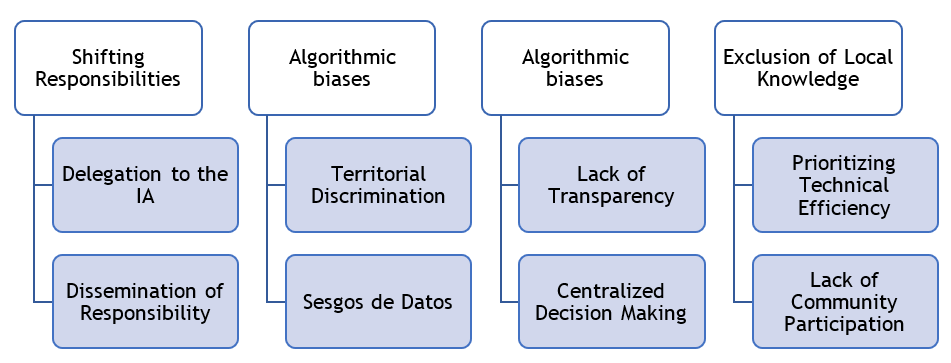

A critical review of the literature found that implementing AI predictive models in environmental policies is not a neutral process: it reproduces and amplifies dynamics of socio-ecological inequality. The studies analyzed show that, despite their potential to optimize natural resource management, these systems tend to operate under technocratic logics that marginalize vulnerable communities and prioritize economic or geopolitical interests. Based on this diagnosis, four central themes emerged that structure the ethical risks identified: algorithmic biases and territorial discrimination, opacity in climate governance, displacement of political responsibilities, and exclusion of local knowledge. These themes reflect tensions between the technical-efficiency paradigm that dominates AI and the principles of climate justice, which demand equity, transparency, and participation in environmental decision-making (see figure 1).

Figure 1. Ethical risks in AI for environmental policies

Algorithmic biases and territorial discrimination

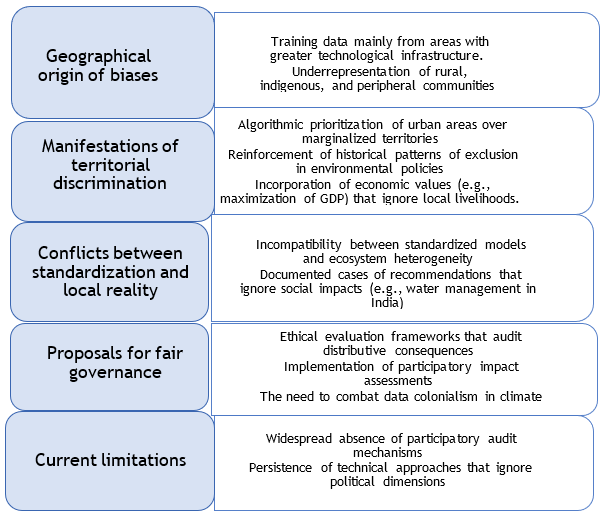

The literature reviewed highlights that AI predictive models used in environmental policies are often biased toward specific geographic and socioeconomic realities, which leads to systemic territorial discrimination (see figure 2).(23,24) These biases originate in the selection of training data from regions with greater technological infrastructure, which leaves out rural, indigenous, or peripheral communities.(25)
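The representativeness problem can be checked with simple arithmetic before any model is trained: compare each region's share of the training records with its share of the population a policy is meant to serve. The Python sketch below does this; the region labels, counts, population figures, and the 0.5 flagging threshold are invented for illustration and are not drawn from the studies reviewed.

```python
# Hypothetical representation audit: compare each region's share of the
# training records with its share of the population a policy should serve.
from collections import Counter


def representation_ratios(training_regions, population):
    """Return {region: data_share / population_share}.

    training_regions: iterable with one region label per training record.
    population: dict mapping region label -> population size (must be > 0).
    A ratio below 1 means the region is underrepresented in the data.
    """
    counts = Counter(training_regions)
    total_records = sum(counts.values())
    total_population = sum(population.values())
    if total_records == 0 or total_population == 0:
        raise ValueError("need at least one record and a non-zero population")
    return {
        region: (counts.get(region, 0) / total_records) / (size / total_population)
        for region, size in population.items()
    }


def underrepresented(ratios, threshold=0.5):
    """Regions whose ratio is below an assumed threshold, sorted by name."""
    return sorted(region for region, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Invented data: most records come from a well-instrumented urban area.
    records = ["urban"] * 750 + ["peri_urban"] * 200 + ["rural"] * 50
    population = {"urban": 500_000, "peri_urban": 300_000, "rural": 200_000}
    ratios = representation_ratios(records, population)
    for region, ratio in sorted(ratios.items()):
        print(f"{region}: {ratio:.2f}")
    print("Underrepresented:", underrepresented(ratios))
```

With the invented counts, `rural` holds 20% of the population but only 5% of the records (a ratio of 0.25) and is the only region flagged. A ratio like this does not prove that a model is biased; it indicates where its outputs deserve closer scrutiny.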

As a result, algorithmic recommendations reinforce historical patterns of exclusion, for example by prioritizing the protection of urban areas over marginalized territories that are less represented in datasets.(26) This phenomenon is not merely technical but political: the “efficiency” criteria built into the models often reflect economic values, such as maximizing GDP or protecting expensive infrastructure, to the detriment of local livelihoods.(27)
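How an “efficiency” criterion shapes outcomes can be shown with a toy ranking. In the hypothetical Python sketch below, four invented areas are ranked for flood protection either by the value of exposed assets alone or by an alternative score (exposed residents weighted up by a social-vulnerability index). Neither formula is taken from the literature reviewed; both are assumptions chosen to make the value judgment visible.

```python
# Toy prioritization contrasting an asset-value-only criterion with a
# hypothetical people-and-vulnerability criterion. All data are invented.
from dataclasses import dataclass


@dataclass
class Area:
    name: str
    asset_value: float    # value of exposed infrastructure (invented units)
    residents: int        # people exposed to the hazard
    vulnerability: float  # social-vulnerability index, assumed to lie in [0, 1]


def economic_score(area: Area) -> float:
    # "Efficiency" criterion: only the value of exposed assets counts.
    return area.asset_value


def equity_score(area: Area) -> float:
    # Hypothetical alternative: exposed residents, weighted up by vulnerability.
    return area.residents * (1.0 + area.vulnerability)


def rank(areas, score):
    """Area names ordered from highest to lowest priority under `score`."""
    return [a.name for a in sorted(areas, key=score, reverse=True)]


AREAS = [
    Area("city_center", asset_value=900.0, residents=20_000, vulnerability=0.2),
    Area("industrial_port", asset_value=700.0, residents=5_000, vulnerability=0.1),
    Area("rural_valley", asset_value=80.0, residents=15_000, vulnerability=0.8),
    Area("informal_settlement", asset_value=30.0, residents=12_000, vulnerability=0.9),
]

if __name__ == "__main__":
    print("Asset value only:", rank(AREAS, economic_score))
    print("People and vulnerability:", rank(AREAS, equity_score))
```

With these figures, the asset-value criterion places `rural_valley` and `informal_settlement` last, while the alternative score ranks `rural_valley` first and `industrial_port` last. The point is not that the second formula is correct but that choosing the objective is itself a political decision embedded in the model.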

Furthermore, the standardization of algorithms clashes with the heterogeneity of ecosystems and community needs.(17,28) Research in India showed how AI systems for water management, relying on hydrological metrics alone, recommended dams in areas already affected by forced displacement without considering social impacts.(29)

Climate justice requires addressing these biases through ethical evaluation frameworks that audit not only the technical accuracy of models but also their distributional consequences.(30) Proposals such as participatory “impact assessments” seek to identify which groups are rendered invisible in the data and how algorithmic outputs redistribute environmental risks.(18,31) However, most current policies lack these mechanisms, perpetuating what has been called “data colonialism” in climate governance.(32)
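One minimal form of the distributional audit mentioned above compares how often a model's output favors each population group. The hypothetical Python sketch below computes per-group selection rates (here, the share of areas selected for protection) and the ratio of the lowest rate to the highest. The group labels and decisions are invented, and the 0.8 flagging threshold is an assumption borrowed from the “four-fifths” convention in employment disparate-impact analysis; the literature reviewed does not prescribe a specific threshold.

```python
# Hypothetical distributional audit: how often does a model's output
# (here, "area selected for protection") favor each population group?
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> {group: share selected}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, chosen in decisions:
        totals[group] += 1
        if chosen:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by highest (1.0 = parity); rates must be non-empty."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


def audit(decisions, threshold=0.8):
    """Return (rates, ratio, flagged); flagged when ratio < assumed threshold."""
    rates = selection_rates(decisions)
    ratio = disparity_ratio(rates)
    return rates, ratio, ratio < threshold


if __name__ == "__main__":
    # Invented model outputs for 170 areas grouped by territory type.
    decisions = (
        [("urban", i < 60) for i in range(100)]
        + [("rural", i < 10) for i in range(50)]
        + [("indigenous_territory", i < 2) for i in range(20)]
    )
    rates, ratio, flagged = audit(decisions)
    for group, rate in sorted(rates.items()):
        print(f"{group}: {rate:.0%} selected")
    print(f"disparity ratio = {ratio:.2f}, flagged = {flagged}")
```

With the invented decisions, 60% of urban areas, 20% of rural areas, and 10% of areas in indigenous territories are selected, giving a ratio of about 0.17, well below the assumed threshold. A participatory assessment would go further, for example by asking which groups are missing from the data altogether, which a rate comparison cannot detect.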

Figure 2. Consequences and limitations associated with algorithmic biases

Opacity in climate governance

The second analytical axis reveals that the opacity of AI systems in environmental policies undermines accountability and democratic participation.(33) According to the documents reviewed, this opacity operates on three levels: corporate secrecy surrounding algorithms patented by technology companies, technical complexity that prevents public scrutiny, and a lack of political will to make decision-making criteria transparent.(34) In practice, this turns predictive models into “black boxes” that legitimize decisions without allowing them to be questioned.(13)

The literature points out that this opacity benefits actors with technological and economic power, as they can influence the design of systems without taking responsibility for their impacts.(34,35) Conversely, affected communities are at a disadvantage when they seek to challenge these decisions.(22,35) This exacerbates historical asymmetries, as corporations and centralized governments control AI infrastructure while local demands for transparency are criminalized or ignored.(14,24)

In response, proposals such as the right to explanation in European regulations (GDPR) or “auditable AI” standards promoted by the UN have emerged.(36) However, their implementation is in its infancy and faces resistance from technology lobbies.(15,28) Climate justice, in this context, requires not only opening up algorithms but also democratizing their governance by including actors traditionally excluded from these processes.
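A modest, concrete reading of “opening up algorithms” is that each score a model produces should come with a breakdown that affected people can inspect. The Python sketch below assumes a simple linear scoring model, with hypothetical feature names and weights, and reports each feature's contribution alongside the total. It illustrates the idea of a per-decision explanation; it is not an implementation of the GDPR provisions or of any UN standard. A linear model is used because its explanation is exact; for more complex models, explanations are generally approximations.

```python
# Sketch of a per-decision explanation for a transparent linear score.
# Feature names, weights, and values are hypothetical.
from dataclasses import dataclass


@dataclass
class ExplainedScore:
    total: float
    contributions: dict  # feature name -> weight * value


def explain_linear_score(weights, features, intercept=0.0):
    """Score = intercept + sum(weight * value), with each term reported."""
    contributions = {name: w * features[name] for name, w in weights.items()}
    return ExplainedScore(intercept + sum(contributions.values()), contributions)


def format_explanation(result, top_n=3):
    """Human-readable summary listing the largest contributions first."""
    ranked = sorted(result.contributions.items(),
                    key=lambda kv: abs(kv[1]), reverse=True)
    lines = [f"score = {result.total:.2f}"]
    lines += [f"  {name}: {value:+.2f}" for name, value in ranked[:top_n]]
    return "\n".join(lines)


if __name__ == "__main__":
    weights = {"flood_depth_m": 2.0, "asset_value_musd": 0.5, "residents_thousands": 0.25}
    features = {"flood_depth_m": 1.5, "asset_value_musd": 4.0, "residents_thousands": 14.0}
    print(format_explanation(explain_linear_score(weights, features)))
```

With the example inputs, the script reports a score of 8.50, with `residents_thousands` (+3.50) as the largest contribution, followed by `flood_depth_m` (+3.00) and `asset_value_musd` (+2.00).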

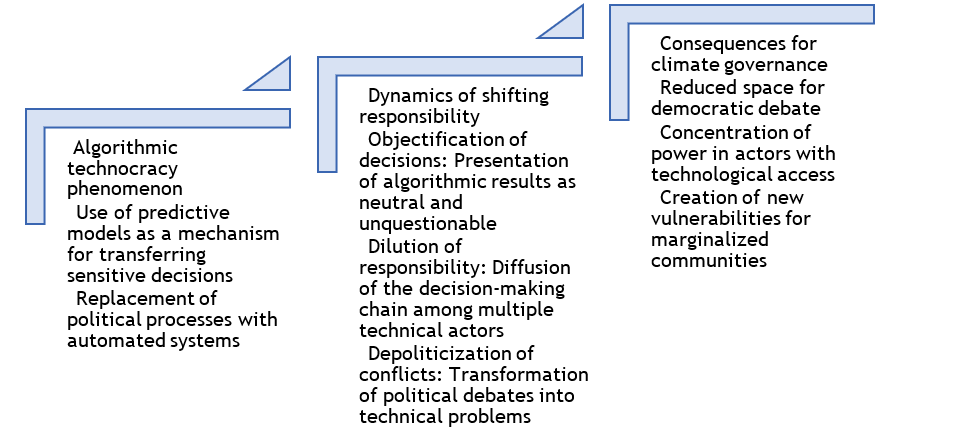

Shifting political responsibilities

The analysis revealed a growing phenomenon of algorithmic technocracy in climate governance, where AI predictive models are used to transfer politically sensitive decisions from human actors to automated systems (see figure 3).(37) This shift operates through three interrelated dynamics: the objectification of decisions, the dilution of responsibility, and the depoliticization of conflicts.(38,39)

Figure 3. Causes and consequences of the shift in political responsibilities

A prime example was the Dutch flood management system, where algorithms determined territorial protection priorities based solely on economic criteria, ignoring interregional equity considerations.(40) When rural communities protested the urban bias of the decisions, authorities claimed they were “following the data.” The literature identified this pattern as “algorithmic authority,” the tendency to accept AI results as superior to human judgment in contexts of climate uncertainty.(41)

This phenomenon poses serious risks to climate justice: it reduces the space for democratic debate on policy alternatives, concentrates power in actors with access to advanced technology, and creates new types of vulnerability for communities that lack the capacity to audit or question predictive models.(18,42) Research in Australia showed how AI systems for allocating post-disaster resources reproduced colonial patterns of exclusion, while officials avoided responsibility by citing technical limitations of the system.(43)

Exclusion of local knowledge and alternative epistemologies

The predictive models that predominate in environmental policy rest on a reductionist epistemology that systematically marginalizes forms of knowledge that cannot be quantified.(44) The document review found that the systems analyzed did not incorporate mechanisms to integrate traditional or community knowledge about ecosystem management.(3,9,14,31) This exclusion operates through three mechanisms: standardization, epistemological validation, and decontextualization (see figure 4).

Cases documented in the Amazon showed how forest monitoring algorithms ignored indigenous ecological classification systems that identified degradation patterns invisible to satellite sensors.(45) In Kenya, Maasai herders were excluded from drought prediction systems because their knowledge could not be translated into the data formats the algorithms required.(46)
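The mechanism described in the Kenyan case, knowledge excluded because it does not fit the required data format, can be made concrete with a deliberately simplified ingestion step. In the hypothetical Python sketch below, the schema accepts only numeric readings; free-text observations are set aside and counted by source instead of being silently discarded, so the pipeline at least reports whose knowledge it leaves out. The sources and observations are invented; the sketch does not describe the systems cited above, nor does it propose a method for integrating qualitative knowledge.

```python
# Hypothetical ingestion step with a rigid numeric schema, showing which
# observations are discarded and where they came from.
from collections import Counter
from dataclasses import dataclass
from typing import Union


@dataclass
class Observation:
    source: str               # who or what produced the observation
    variable: str             # what it describes
    value: Union[float, str]  # numeric reading or free-text description


def strict_ingest(observations):
    """Split observations into numeric ones a rigid schema accepts and the rest."""
    kept, dropped = [], []
    for obs in observations:
        is_number = isinstance(obs.value, (int, float)) and not isinstance(obs.value, bool)
        (kept if is_number else dropped).append(obs)
    return kept, dropped


def dropped_by_source(dropped):
    """Count discarded observations per source, so the loss is visible."""
    return dict(Counter(obs.source for obs in dropped))


if __name__ == "__main__":
    observations = [
        Observation("satellite", "ndvi", 0.42),
        Observation("rain_gauge", "rainfall_mm", 12.5),
        Observation("herder_report", "pasture", "grass drying early on northern slopes"),
        Observation("herder_report", "water", "seasonal pools smaller than usual"),
        Observation("community_monitor", "wells", "water level lower than last year"),
    ]
    kept, dropped = strict_ingest(observations)
    print(f"kept {len(kept)} of {len(observations)} observations")
    print("discarded by source:", dropped_by_source(dropped))
```

With the invented observations, only the satellite and rain-gauge readings are kept (2 of 5), and the report shows two herder observations and one community-monitor observation discarded.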

This exclusion has serious practical consequences: it reduces the effectiveness of policies by ignoring locally proven knowledge, erodes the cultural rights of indigenous peoples, and reproduces epistemological colonialism in environmental governance.(9,41) The literature also reveals emerging efforts to develop “intercultural AI” that combines predictive models with traditional knowledge systems.(22,28) However, such cases remain exceptional given the predominance of technocentric approaches.

Figure 4. Predictive models for environmental policies

DISCUSSION

The findings reveal that the implementation of predictive models in climate policy does not occur in an ethical vacuum but reproduces and amplifies pre-existing power dynamics. The supposed technical neutrality of these systems often masks value judgments that prioritize some interests over others, particularly when training data reflect historical or geopolitical biases.(47) This poses a fundamental challenge, especially when it affects communities already marginalized in contexts of climate vulnerability.(38,40)

A worrying finding is how these systems can erode democratic processes in environmental governance.(48) Transferring decision-making authority to opaque algorithms reduces the space for public debate on policy alternatives and for accountability.(35,39) This is particularly serious when affected populations lack the technical or institutional capacity to audit the models that determine access to critical resources or the distribution of environmental risks, which creates new forms of political exclusion under technocratic structures.(42)

The research also highlights a deep epistemological tension between the scientific-technical knowledge incorporated into algorithms and local expertise in ecosystem management.(9,18) This gap is not merely methodological; it reflects asymmetrical power relations that invalidate non-Western forms of knowledge.(49) The results suggest that the effectiveness of climate policies is compromised when the systems of environmental understanding and adaptation developed over centuries by indigenous and local communities are ignored.(24)

Finally, the study highlights the central paradox of relying on standardized technological solutions to address environmental crises that are, by nature, deeply contextual and unequal. While AI can offer valuable tools for climate analysis, its implementation requires ethical frameworks that prioritize equity over efficiency, participation over automation, and epistemic diversity over technological universalism.(50,51) This requires not only improving technical systems but also transforming the governance structures that determine how, by whom, and for what purpose these technologies are used.

CONCLUSIONS

This study shows that the implementation of AI predictive models in environmental policies requires a critical approach that goes beyond the prevailing technological optimism. The findings indicate that these systems, far from being neutral, reproduce and amplify structural inequalities when they are not accompanied by robust ethical safeguards. The study concludes that the fundamental challenge lies in developing governance frameworks that prioritize climate justice over technical efficiency by ensuring algorithmic transparency, effective community participation, and recognition of local knowledge.

The research underscores the urgency of reorienting technological development toward intercultural and auditable AI models that complement, rather than replace, democratic processes in environmental decision-making. This requires not only technical improvements in predictive systems but also profound transformations in the power structures that determine their design and implementation, so that technological innovation truly serves socio-environmental equity.

REFERENCES

1. Wong-Parodi G, Mach K, Jagannathan K, Sjostrom K. Insights for developing effective decision support tools for environmental sustainability. Current Opinion in Environmental Sustainability 2020;42:52-59. https://doi.org/10.1016/j.cosust.2020.01.005

2. Ulibarri N, Ajibade I, Galappaththi E, Joe E, Lesnikowski A, Mach K, et al. A global assessment of policy tools to support climate adaptation. Climate Policy 2022;22(1):77-96. https://doi.org/10.1080/14693062.2021.2002251

3. Pérez Gamboa AJ, García Acevedo Y, García Batán J. Life project and university training process: an exploratory study at the University of Camagüey. Transformación 2019;15(3):280-296. http://scielo.sld.cu/scielo.php?script=sci_arttext&pid=S2077-29552019000300280

4. Shen C, Li S, Wang X, Liao Z. The effect of environmental policy tools on regional green innovation: Evidence from China. Journal of Cleaner Production 2020;254:120122. https://doi.org/10.1016/j.jclepro.2020.120122

5. Ji X, Wu G, Lin J, Zhang J, Su P. Reconsider policy allocation strategies: A review of environmental policy instruments and application of the CGE model. Journal of Environmental Management 2022;323:116176. https://doi.org/10.1016/j.jenvman.2022.116176

6. Dudkowiak A, Grajewski D, Dostatni E. Analysis of Selected IT Tools Supporting Eco-Design in the 3D CAD Environment. IEEE Access 2021;9:134945-134956. https://doi.org/10.1109/ACCESS.2021.3116469

7. Gómez-Monroy CA, Cuesta-Ramírez DH, Gómez-Cano CA. Analysis of the perception of tax on the consumption of plastic bags charged in large and medium-sized establishments from the center of Florence. LOGINN Investigación Científica y Tecnológica 2021;5(1). https://doi.org/10.23850/25907441.4320

8. Wu T, Chin K, Lai Y. Applications of Intelligent Environmental IoT Detection Tools in Environmental Education of College Students. 2022 12th International Congress on Advanced Applied Informatics (IIAI-AAI) 2022:240-243. https://doi.org/10.1109/iiaiaai55812.2022.00055

9. Malik A, Tikhamarine Y, Sammen S, Abba S, Shahid S. Prediction of meteorological drought by using hybrid support vector regression optimized with HHO versus PSO algorithms. Environmental Science and Pollution Research 2021;28:39139-39158. https://doi.org/10.1007/s11356-021-13445-0

10. Brunner M, Slater L, Tallaksen L, Clark M. Challenges in modeling and predicting floods and droughts: A review. Wiley Interdisciplinary Reviews: Water 2021;8(3):e1520. https://doi.org/10.1002/wat2.1520

11. Pérez-Gamboa AJ, Gómez-Cano C, Sánchez-Castillo V. Decision making in university contexts based on knowledge management systems. Data and Metadata 2022;2:92. https://doi.org/10.56294/dm202292

12. Anshuka A, Van Ogtrop F, Vervoort W. Drought forecasting through statistical models using standardised precipitation index: a systematic review and meta-regression analysis. Natural Hazards 2019;97:955-977. https://doi.org/10.1007/s11069-019-03665-6

13. Dikshit A, Pradhan B. Interpretable and explainable AI (XAI) model for spatial drought prediction. Science of the Total Environment 2021;801:149797. https://doi.org/10.1016/j.scitotenv.2021.149797

14. Zhang R, Chen Z, Xu L, Ou C. Meteorological drought forecasting based on a statistical model with machine learning techniques in Shaanxi province, China. Science of the Total Environment 2019;665:338-346. https://doi.org/10.1016/j.scitotenv.2019.01.431

15. McGovern A, Ebert-Uphoff I, Gagne D, Bostrom A. Why we need to focus on developing ethical, responsible, and trustworthy artificial intelligence approaches for environmental science. Environmental Data Science 2022;1:e5. https://doi.org/10.1017/eds.2022.5

16. Gómez Cano CM, Valencia Arias JA. Mechanisms used to measure technological innovation capabilities in organizations: results from a bibliometric analysis. Revista Guillermo de Ockham 2020;18(1):69-79. https://doi.org/10.21500/22563202.4550

17. Coeckelbergh M. AI for climate: freedom, justice, and other ethical and political challenges. AI and Ethics 2020;1:67-72. https://doi.org/10.1007/s43681-020-00007-2

18. Osipov V, Skryl T. AI’s contribution to combating climate change and achieving environmental justice in the global economy. Frontiers in Environmental Science 2022;10:952695. https://doi.org/10.3389/fenvs.2022.952695

19. Byskov M, Hyams K. Epistemic injustice in Climate Adaptation. Ethical Theory and Moral Practice 2022;25:613-634. https://doi.org/10.1007/s10677-022-10301-z

20. Rodríguez-Torres E, Gómez-Cano C, Sánchez-Castillo V. Management information systems and their impact on business decision making. Data and Metadata 2022;1:21. https://doi.org/10.56294/dm202221

21. Verlie B. Climate justice in more-than-human worlds. Environmental Politics 2022;31(2):297-319. https://doi.org/10.1080/09644016.2021.1981081

22. Cowls J, Tsamados A, Taddeo M, Floridi L. The AI gambit: leveraging artificial intelligence to combat climate change—opportunities, challenges, and recommendations. AI & Society 2023;38:283-307. https://doi.org/10.1007/s00146-021-01294-x

23. Sardo M. Responsibility for climate justice: Political not moral. European Journal of Political Theory 2023;22(1):26-50. https://doi.org/10.1177/1474885120955148

24. Gómez-Cano C, Sánchez V, Tovar G. Endogenous factors causing irregular permanence: a reading from teaching practice. Educación y Humanismo 2018;20(35):96-112. https://doi.org/10.17081/eduhum.20.35.3030

25. Porter L, Rickards L, Verlie B, Bosomworth K, Moloney S, Lay B, et al. Climate Justice in a Climate Changed World. Planning Theory & Practice 2020;21(2):293-321. https://doi.org/10.1080/14649357.2020.1748959

26. Cho J, Ahmed S, Hilbert M, Liu B, Luu J. Do Search Algorithms Endanger Democracy? An Experimental Investigation of Algorithm Effects on Political Polarization. Journal of Broadcasting & Electronic Media 2020;64(2):150-172. https://doi.org/10.1080/08838151.2020.1757365

27. Boratto L, Marras M, Faralli S, Stilo G. International Workshop on Algorithmic Bias in Search and Recommendation (Bias 2020). In: Advances in Information Retrieval. Lecture Notes in Computer Science 2020;12036:637-640. https://doi.org/10.1007/978-3-030-45442-5_84

28. Gómez-Cano C, Sánchez-Castillo V. Evaluation of the maturity level in project management of a public services company. Económicas CUC 2021;42(2):133-144. https://doi.org/10.17981/econcuc.42.2.2021.Org.7

29. Zhang M, Wu S, Yu X, Wang L. Dynamic Graph Neural Networks for Sequential Recommendation. IEEE Transactions on Knowledge and Data Engineering 2023;35(5):4741-4753. https://doi.org/10.1109/TKDE.2022.3151618

30. Di W, Wu Z, Lin Y. TRR: Target-relation regulated network for sequential recommendation. PLoS ONE 2022;17(5):e0269651. https://doi.org/10.1371/journal.pone.0269651

31. Liu F, Tang R, Li X, Zhang W, Ye Y, Chen H, et al. State representation modeling for deep reinforcement learning based recommendation. Knowledge-Based Systems 2020;205:106170. https://doi.org/10.1016/j.knosys.2020.106170

32. Zhang Y, Yin G, Dong H, Zhang L. Attention-based Frequency-aware Multi-scale Network for Sequential Recommendation. Applied Soft Computing 2022;127:109349. https://doi.org/10.1016/j.asoc.2022.109349

33. Guzmán DL, Gómez-Cano C, Sánchez-Castillo V. State building through citizen participation. Revista Academia & Derecho 2022;14(25). https://doi.org/10.18041/2215-8944/academia.25.10601

34. Wellmann T, Lausch A, Andersson E, Knapp S, Cortinovis C, Jache J, et al. Remote sensing in urban planning: Contributions towards ecologically sound policies? Landscape and Urban Planning 2020;204:103921. https://doi.org/10.1016/j.landurbplan.2020.103921

35. Bacon A, Russell J. The Logic of Opacity. Philosophy and Phenomenological Research 2019;99(1):81-114. https://doi.org/10.1111/PHPR.12454

36. Sánchez Castillo V, Gómez Cano CA, Millán Rojas EE. Dynamics of operation and challenges of the peasant market Coopmercasan of Florencia (Caquetá). Investigación y Desarrollo 2020;28(2):22-56. https://doi.org/10.14482/indes.28.2.330.122

37. Zheng Y. Does bank opacity affect lending? Journal of Banking and Finance 2020;119:105900. https://doi.org/10.1016/j.jbankfin.2020.105900

38. Chin C. Business Strategy and Financial Opacity. Emerging Markets Finance and Trade 2023;59(7):1818-1834. https://doi.org/10.1080/1540496X.2022.2147779

39. Yin X, Zamani M, Liu S. On Approximate Opacity of Cyber-Physical Systems. IEEE Transactions on Automatic Control 2021;66(4):1630-1645. https://doi.org/10.1109/TAC.2020.2998733

40. Guo Y, Jiang X, Guo C, Wang S, Karoui O. Overview of Opacity in Discrete Event Systems. IEEE Access 2020;8:48731-48741. https://doi.org/10.1109/ACCESS.2020.2977992

41. Tovar-Cardozo G, Sánchez-Castillo V, Gómez-Cano CA. Tourism as an economic alternative in the municipality of Belén de los Andaquíes (Caquetá). Revista Criterios 2020;27(1):173-188. https://doi.org/10.31948/rev.criterios/27.1-art8

42. Hélouët L, Marchand H, Ricker L. Opacity with powerful attackers. IFAC-PapersOnLine 2018;51(7):464-471. https://doi.org/10.1016/J.IFACOL.2018.06.341

43. Koo K, Kim J. CEO power and firm opacity. Applied Economics Letters 2019;26(10):791-794. https://doi.org/10.1080/13504851.2018.1497841

44. Maurer M, Bogner F. Modelling environmental literacy with environmental knowledge, values and (reported) behaviour. Studies in Educational Evaluation 2020;65:100863. https://doi.org/10.1016/j.stueduc.2020.100863

45. Bind M. Causal Modeling in Environmental Health. Annual Review of Public Health 2019;40:23-43. https://doi.org/10.1146/annurev-publhealth-040218-044048

46. Wood K, Stillman R, Hilton G. Conservation in a changing world needs predictive models. Animal Conservation 2018;21(2):87-88. https://doi.org/10.1111/acv.12371

47. Cahan E, Hernandez-Boussard T, Thadaney-Israni S, Rubin D. Putting the data before the algorithm in big data addressing personalized healthcare. NPJ Digital Medicine 2019;2:78. https://doi.org/10.1038/s41746-019-0157-2

48. Urban C, Christakis M, Wüstholz V, Zhang F. Perfectly parallel fairness certification of neural networks. Proceedings of the ACM on Programming Languages 2020;4(OOPSLA):1-30. https://doi.org/10.1145/3428253

49. Wong P. Democratizing Algorithmic Fairness. Philosophy & Technology 2020;33(2):225-244. https://doi.org/10.1007/s13347-019-00355-w

50. Guayara Cuéllar CT, Millán Rojas EE, Gómez Cano CA. Design of a virtual digital literacy course for teachers at the University of Amazonia. Revista Científica 2019;(34):34-48. https://doi.org/10.14483/23448350.13314

51. Uygur O. CEO ability and corporate opacity. Global Finance Journal 2018;35:72-81. https://doi.org/10.1016/J.GFJ.2017.05.002

FUNDING

None.

CONFLICT OF INTEREST

None.

AUTHOR CONTRIBUTION

Conceptualization: Tulio Andres Clavijo Gallego.

Data curation: Tulio Andres Clavijo Gallego.

Formal analysis: Tulio Andres Clavijo Gallego.

Funding acquisition: Tulio Andres Clavijo Gallego.

Investigation: Tulio Andres Clavijo Gallego.

Methodology: Tulio Andres Clavijo Gallego.

Project administration: Tulio Andres Clavijo Gallego.

Resources: Tulio Andres Clavijo Gallego.

Software: Tulio Andres Clavijo Gallego.

Supervision: Tulio Andres Clavijo Gallego.

Validation: Tulio Andres Clavijo Gallego.

Visualization: Tulio Andres Clavijo Gallego.

Writing – original draft: Tulio Andres Clavijo Gallego.

Writing – review and editing: Tulio Andres Clavijo Gallego.